The AI Delusion

by Gary Smith

Oxford University Press, 256 pp., USD $27.95.

“I for one welcome our new computer overlords” – Ken Jennings, Jeopardy champion

In The AI Delusion, economist Gary Smith provides a warning for mankind. However, it is not a warning about machines; it is a warning about ourselves and our tendency to trust machines to make decisions for us. Artificial Intelligence is fantastic for limited and focused tasks but is not close to actual general intelligence. Professor Smith points out that machines, for which all patterns in data appear equally meaningful, have none of the real-world understanding required to filter out nonsense. Even worse is the fact that many of the new algorithms hide their details so we have no way of determining if the output is reasonable. Even human beings, when not engaging their critical thinking skills, mistakenly draw conclusions from meaningless patterns. If we blindly trust conclusions from machines, we are falling for the AI delusion and will certainly suffer because of it.

The Real Danger of Artificial Intelligence

Speculators about the future of artificial intelligence (AI) tend to fall into one of two camps. The first group believes that, when hardware reaches the same level of complexity and processing speed as a human brain, machines will quickly surpass human-level intelligence and lead us into a new age of scientific discovery and inventions. As part of his final answer of the man vs. machine match against IBM’s Watson, former Jeopardy! champion Ken Jennings seemed to indicate that he was in this first camp by welcoming our computer overlords. The impressive AI system, which beat him by answering natural language questions and appeared to understand and solve riddles, made fully intelligent machines seem to be right around the corner.[1]

The second camp dreads an AI revolution. Having grown up on sci-fi movies like The Matrix and The Terminator, they worry that superior intelligence will lead machines to decide the fate of mankind, their only potential threat, in a microsecond. Alternatively, and more realistically, they see a risk that AI machines may simply not value or consider human life at all and unintentionally extinguish us in their single-minded pursuit of programmed tasks. Machines may find a creative solution that people did not anticipate and endanger us all.

Gary Smith convincingly presents his belief that neither of these views is correct. If achieving true AI is like landing on the moon, all of the impressive recent advances are more like tree-planting than rocket-building. New advancements are akin to adding branches to the tree, and getting us higher off the ground, but not on the path towards the moon.

Humanity has turned away from the exceedingly difficult task of trying to mimic the way the brain works and towards the easier applications (such as spell-checkers and search engines) that leverage what computers do well. These new applications are useful and profitable but, if the goal is for machines to be capable of understanding the world, we need to start over with a new approach to AI. Machines gaining human-like intelligence is not something around the corner unless we start building rockets.

The AI Delusion warns us that the real danger of AI is not that computers are smarter than we are but that we think computers are smarter than we are. If people stop thinking critically and let machines make important decisions for them, like determining jail sentences or hiring job candidates, any one of us may soon become a victim of an arbitrary and unjustifiable conclusion. It is not that computers are not incredibly useful; they allow us to do in minutes what might take a lifetime without them. The point is that, while current AI is artificial, it is not intelligent.

The Illusion of Intelligence

Over the years I have learned a tremendous amount from Gary Smith’s books and his way of thinking. It seems like a strange compliment but he is deeply familiar with randomness. He knows how random variables cluster, how long streaks can be expected to continue, and what random walks look like. He can examine a seemingly interesting statistical fluke in the data and conclude “you would find that same pattern with random numbers!” and then prove it by running a simulation. He uses this tactic often in his books and it is extremely effective. How can you claim that a pattern is meaningful when he just created it out of thin air?

The AI Delusion begins with a painful example for the political left of the United States. Smith points a finger at the over-reliance on automated number-crunching for the epic failure of Hillary Clinton’s presidential campaign in 2016. Clinton had a secret weapon: a predictive modeling system. Based on historical data, the system recommended campaigning in Arizona in an attempt for a blowout victory while ignoring states that Democrats won in prior years. The signs were there that the plan needed adjusting: her narrow victory over Bernie Sanders, the enthusiastic crowds turning out for Trump, and the discontent of blue-collar voters who could no longer be taken for granted. However, since her computer system did not measure those things, they were considered unimportant. Clinton should have heeded the advice of sociologist William Bruce Cameron: “not everything that can be counted counts, and not everything that counts can be counted.” Blindly trusting machines to have the answers can have real consequences. When it comes to making predictions about the real world, machines have blind spots, and we need to watch for them.

In contrast, machines are spectacular at playing games; they can beat the best humans at practically every game there is. Games like chess were traditionally considered proxies for intelligence, so if computers can crush us, does that mean that they are intelligent? As Smith reviews various games, he shows that the perception that machines are smart is an illusion. Software developers take advantage of mind-boggling processing speed and storage capabilities to create programs that appear smart. They focus on a narrow task, in a purified environment of digital information, and accomplish it in a way that humans never would. Smith points out the truth behind the old joke that a computer can make a perfect chess move while it is in a room that is on fire; machines do not think, they just follow instructions. The fact that they’re good at some things does not mean they will be good at everything.

In the early days of AI, Douglas Hofstadter, the author of the incredibly ambitious book Gödel, Escher, Bach: An Eternal Golden Braid, tackled the seemingly impossible task of replicating the way a human mind works. He later expressed disappointment as he saw the development of AI take a detour and reach for the tops of trees rather than the moon:

To me, as a fledgling [artificial intelligence] person, it was self-evident that I did not want to get involved in that trickery. It was obvious: I don’t want to be involved in passing off some fancy program’s behavior for intelligence when I know that it has nothing to do with intelligence.

A New Test for AI

The traditional test for machine intelligence is the Turing Test. It essentially asks the question: “Can a computer program fool a human questioner into thinking it is a human?” Depending on the sophistication of the questioner, the freedom to ask anything at all can pose quite a challenge for a machine. For example, most programs would be stumped by the question “Would flugly make a good name for a perfume?” The problem with this test is that it is largely a game of deception. Pre-determined responses and tactics, such as intentionally making mistakes, can fool people without representing any useful advance in intelligence. You may stump Siri with the ‘flugly’ question today, but tomorrow the comedy writers at Apple may have a witty response ready: “Sure, flidiots would love it.” This would count as the trickery Hofstadter referred to. With enough training, a program will pass the test but it would not be due to anything resembling human intelligence; it would be the result of a database of responses and a clever programmer who anticipated the questions.

Consider Scrabble legend Nigel Richards. In May 2015, Richards, who does not speak French, memorized 386,000 French words. Nine weeks later he won the first of his two French-language Scrabble World Championships. This can provide insight into how computers do similarly amazing things without actually understanding anything. Another analogy is the thought experiment from John Searle in which someone in a locked room receives and passes back messages under the door in Chinese. The person in the room does not know any Chinese; she is just following computer code that was created to pass the Turing Test in Chinese. If we accept that the person in the room following the code does not understand the questions, how can we claim that a computer running the code does?

A tougher test to evaluate machine intelligence is the Winograd Schema Challenge. Consider what the word ‘it’ refers to in the following sentences:

I can’t cut that tree down with that axe; it is too thick.

I can’t cut that tree down with that axe; it is too small.

A human can easily determine that, in the first sentence, ‘it’ refers to the tree while, in the second, ‘it’ is the axe. Computers fail these types of tasks consistently because, like Nigel Richards, they do not know what words mean. They don’t know what a tree is, what an axe is, or what it means to cut something down. Oren Etzioni, a professor of computer science, asks “how can computers take over the world, if they don’t know what ‘it’ refers to in a sentence?”

One of my favorite surprises from the book is the introduction of a new test (called the Smith Test of course) for machine intelligence:

Collect 100 sets of data; for example, data on U.S. stock prices, unemployment, interest rates, rice prices, sales of blue paint in New Zealand, and temperatures in Curtin, Australia. Allow the computer to analyze the data in any way it wants, and then report the statistical relationships that it thinks might be useful for making predictions. The computer passes the Smith test if a human panel concurs that the relationships selected by the computer make sense.

This test highlights the two major problems with unleashing sophisticated statistical algorithms on data. One problem is that computers do not know what they have found; they do not know anything about the real world. The other problem is that it is easy, even with random data, to find associations. That means that, when given a lot of data, what computers find will almost certainly be meaningless. Without including a critical thinker in the loop, modern knowledge discovery tools may be nothing more than noise discovery tools.

It is hard to imagine how a machine could use trickery to fake its way through a test like this. Countless examples in the book show that even humans who are not properly armed with a sense of skepticism can believe that senseless correlations have meaning:

- Students who choose a second major have better grades on average. Does this mean a struggling student should add a second major?

- Men who are married live longer than men who are divorced or single. Can men extend their lifespans by tying the knot?

- Emergency room visits on holidays are more likely to end badly. Should you postpone emergency visits until the holidays are over?

- Freeways with higher speed limits have fewer traffic fatalities. Should we raise speed limits?

- Family tension is strongly correlated with hours spent watching television. Will everyone get along better if we ditch the TV?

- People who take driver-training courses have more accidents than people who do not. Are those courses making people more reckless?

- Students who take Latin courses score higher on verbal ability. Should everyone take Latin?

Many people incorrectly assume causal relationships in questions like these and unthinking machines would certainly do so as well. Confounding variables only become clear when a skeptical mind is put to use. Only after thinking carefully about what the data is telling us, and considering alternate reasons why there might be an association, can we come to reasonable conclusions.

Gary Smith’s specialty is teaching his readers how to spot nonsense. I’m reminded of a memorable speech from the movie My Cousin Vinny[2]:

Vinny: The D.A.’s got to build a case. Building a case is like building a house. Each piece of evidence is just another building block. He wants to make a brick bunker of a building. He wants to use serious, solid-looking bricks, like, like these, right? [puts his hand on the wall]

Bill: Right.

Vinny: Let me show you something.

[He holds up a playing card, with the face toward Billy]

Vinny: He’s going to show you the bricks. He’ll show you they got straight sides. He’ll show you how they got the right shape. He’ll show them to you in a very special way, so that they appear to have everything a brick should have. But there’s one thing he’s not gonna show you. [He turns the card, so that its edge is toward Billy]

Vinny: When you look at the bricks from the right angle, they’re as thin as this playing card. His whole case is an illusion, a magic trick…Nobody – I mean nobody – pulls the wool over the eyes of a Gambini.

Professor Smith endeavors to make Gambinis out of us all. After reading his books, you are taught to look at claims from the right angle and see for yourself if they are paper thin. In the case of The AI Delusion, the appearance of machine intelligence is the magic trick that is exposed. True AI would be a critical thinker with the capability to separate the meaningful from the spurious, the sensible from the senseless, and causation from correlation.

Data-Mining for Nonsense

The mindless ransacking of data and looking for patterns and correlations, which is what AI does best, is at the heart of the replication crisis in science. Finding an association in a large dataset just means that you looked, nothing more. Professor Smith writes about a conversation he had with a social psychologist at Sci Foo 2015, an annual gathering of scientists and writers at Googleplex. She expressed admiration for Daryl Bem, a social psychologist, who openly endorsed blindly exploring data to find interesting patterns. Bem is known, not surprisingly, for outlandish claims that have been refuted by other researchers. She also praised Diederik Stapel who has even admitted that he made up data. Smith changed the subject. The following day a prominent social psychologist said that his field is the poster-child for irreproducible research and that his default assumption is that every new study is false. That sounds like a good bet. Unfortunately, adding more data and high-tech software that specializes in discovering patterns will make the problem worse, not better.

To support the idea that computer-driven analysis is trusted more than human-driven analysis, Smith recounts a story about an economist in 1981 who was being paid by the Reagan administration to develop a computer simulation that predicted that tax revenue would increase if tax rates were reduced. He was unsuccessful no matter how much the computer tortured the data. He approached Professor Smith for help and was not happy when Smith advised him to simply accept that reducing tax rates would reduce tax revenue (which is, in fact, what happened). The effort to find a way to get a computer program to provide the prediction is telling; even back in the 80s people considered computers to be authoritative. If the machine says it, it must be true.

Modern day computers can torture data like never before. A Dartmouth graduate student named Craig Bennett used an MRI machine to search for brain activity in a salmon as it was shown pictures and asked questions. The sophisticated statistical software identified some areas of activity! Did I mention that the fish was dead? Craig grabbed it from a local market. There were so many areas (voxels) being examined by the machine that it would inevitably find some false positives. This was the point of the study; people should be skeptical of findings that come from a search through piles of data. Craig published his research and won the Ig Nobel Prize, which is awarded each year to “honor achievements that first make people laugh, and then make them think.” The lesson for the readers of The AI Delusion is that anyone can read the paper and chuckle at the absurdity of the idea that the brain of a dead fish would respond to photographs, but the most powerful and complex neural net in the world, given the same data, would not question it.
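The arithmetic behind the dead salmon's "brain activity" is easy to sketch. The Python below is only an illustration, not code from the book or from Bennett's study; the voxel count and significance threshold are hypothetical round numbers, and `count_false_positives` is a name made up for this example. It relies on one standard fact: when nothing real is going on, a well-behaved test's p-values are spread uniformly between 0 and 1, so testing enough voxels guarantees that some will clear the bar by chance.

```python
import random


def count_false_positives(n_voxels=10_000, alpha=0.01, seed=0):
    """Count 'active' voxels when every voxel is pure noise.

    n_voxels and alpha are hypothetical values chosen for illustration.
    Returns (hits without correction, hits with a Bonferroni correction).
    """
    rng = random.Random(seed)
    # Under the null hypothesis each p-value is uniform on [0, 1).
    p_values = [rng.random() for _ in range(n_voxels)]
    # Naive approach: call every voxel with p < alpha a discovery.
    uncorrected = sum(p < alpha for p in p_values)
    # Bonferroni: divide the threshold by the number of tests performed.
    bonferroni = sum(p < alpha / n_voxels for p in p_values)
    return uncorrected, bonferroni


if __name__ == "__main__":
    naive, corrected = count_false_positives()
    print(f"Noise-only voxels flagged without correction: {naive}")
    print(f"Noise-only voxels flagged with Bonferroni:    {corrected}")
```

With these assumed numbers, roughly 10,000 × 0.01 = 100 voxels should light up even though there is no signal at all. A correction for multiple comparisons shrinks that count, but it still takes a person to ask whether the subject was alive.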

One of the biggest surprises in the book was the effective criticism of popular statistical procedures including stepwise regression, ridge regression, neural networks, and principal components analysis. Anyone under the illusion that these procedures will protect them against the downsides of data-mining is disabused of that notion. Professor Smith knows their histories and technical details intimately. Ridge regression, in particular, takes a beating as a “discredited” approach. Smith delivers the checkmate, in true Smithian style, by sending four equivalent representations of Milton Friedman’s model of consumer spending to a ridge regression specialist to analyze:

I did not tell him that the data were for equivalent equations. The flimsy foundation of ridge regression was confirmed in my mind by the fact that he did not ask me anything about the data he was analyzing. They were just numbers to be manipulated. He was just like a computer. Numbers are numbers. Who knows or cares what they represent? He estimated the models and returned four contradictory sets of ridge estimates.

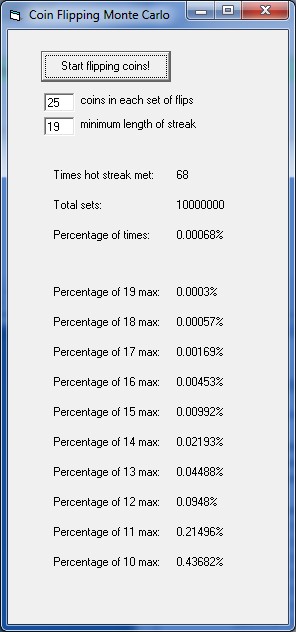

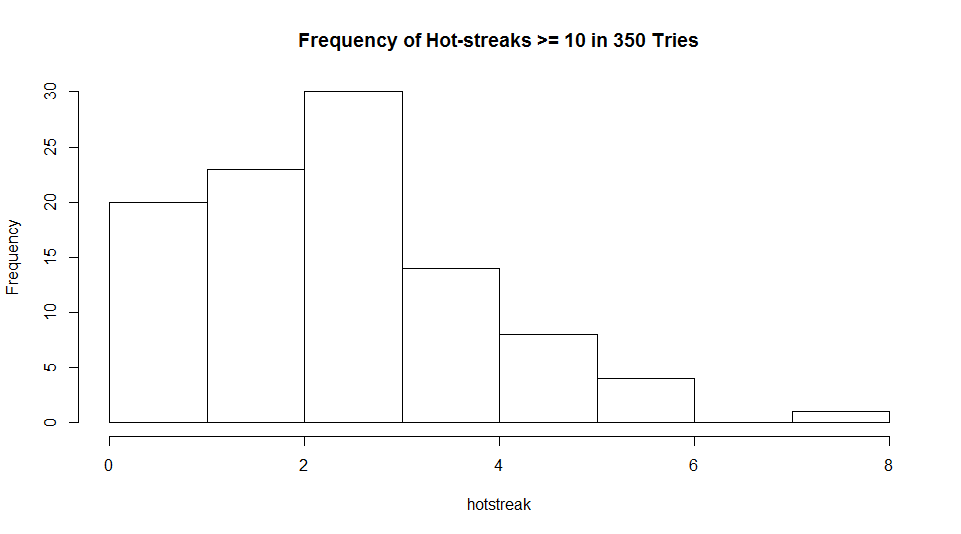

Smith played a similar prank on a technical stock analyst. He sent fictional daily stock prices based on student coin flips to the analyst to see if it would be a good time to invest. The analyst never asked what companies the price history was from but became very excited about the opportunity to invest in a few of them. When Smith informed him that they were only coin flips, he was disappointed. He was not disappointed that his approach found false opportunities in noise but that he could not bet on his predictions. He was such a firm believer in his technical analysis that he actually believed he could predict future coin flips.
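A rough sketch of how such a price history could be produced appears below. It is an assumption-laden illustration rather than Smith's actual classroom procedure: the starting price, step size, and number of days are invented, and `coin_flip_prices` and `longest_streak` are hypothetical helper names. Each day the price moves up or down by a fixed amount on a fair coin flip, and the second function measures the kind of "momentum" a chart reader might seize on.

```python
import random


def coin_flip_prices(n_days=250, start=100.0, step=0.25, seed=0):
    """Build a fictional daily price series from fair coin flips.

    Heads moves the price up by `step`, tails moves it down by `step`.
    All parameter values are illustrative assumptions.
    """
    rng = random.Random(seed)
    prices = [start]
    for _ in range(n_days):
        move = step if rng.random() < 0.5 else -step
        prices.append(prices[-1] + move)
    return prices


def longest_streak(prices):
    """Length of the longest run of consecutive moves in one direction."""
    best = current = 0
    previous_direction = 0
    for earlier, later in zip(prices, prices[1:]):
        direction = 1 if later > earlier else -1
        # Extend the run if the direction repeats, otherwise start a new one.
        current = current + 1 if direction == previous_direction else 1
        best = max(best, current)
        previous_direction = direction
    return best


if __name__ == "__main__":
    prices = coin_flip_prices()
    print(f"Final price after {len(prices) - 1} flips: {prices[-1]:.2f}")
    print(f"Longest streak of same-direction days: {longest_streak(prices)}")
```

Long streaks and apparent trends show up in almost every run, which is the point of the prank: the patterns are real features of the chart, but they carry no information about the next flip.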

Automated stock-trading systems, similar to AI, are not concerned with real world companies; the buy and sell decisions are based entirely on transitory patterns in the price and the algorithms are tuned to the primarily meaningless noise of historical data. I wondered why, if stock trading systems are garbage, investment companies spend billions of dollars on trading centers as close to markets as possible. Smith explains this as well: they want to exploit tiny price discrepancies thousands of times per second or to front-run orders from investors and effectively pick-pocket them. This single-minded pursuit of a narrow goal without concern for the greater good is unfortunately also a feature of AI. The mindless world of high-frequency trading, both when it is profitable (exploiting others) and when it is not (making baseless predictions based on spurious patterns), serves as an apt warning about the future that awaits other industries if they automate their decision-making.

Gary Smith draws a clear distinction between post-hoc justification for patterns found rummaging through data and the formation of reasonable hypotheses that are then validated or refuted based on the evidence. The former is unreliable and potentially dangerous while the latter was the basis of the scientific revolution. AI is built, unfortunately, to maximize rummaging and minimize critical thinking. The good news is that this blind spot ensures that AI will not be replacing scientists in the workforce anytime soon.

There Are No Shortcuts

If you have read other books from Gary Smith, you know to expect many easy-to-follow examples that demonstrate his ideas. Physicist Richard Feynman once said “If you cannot explain something in simple terms, you don’t understand it.” Smith has many years of teaching experience and has developed a rare talent for boiling ideas down to their essence and communicating them in a way that anyone can understand.

Many of the concepts seem obvious after you have understood them. However, do not be fooled into believing they are self-evident. An abundance of costly failures have resulted from people who carelessly disregarded them. Consider the following pithy observations…

We think that patterns are unusual and therefore meaningful.

Patterns are inevitable in Big Data and therefore meaningless.

The bigger the data the more likely it is that a discovered pattern is meaningless.

You see at once the danger that Big Data presents for data-miners. No amount of statistical sophistication can separate out the spurious relationships from the meaningful ones. Even testing predictive models on fresh data just moves the problem of finding false associations one level further away. The scientific way is theory first and data later.
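The claim that bigger data yields more meaningless patterns can be checked with a small simulation in the spirit of Smith's random-number demonstrations. The sketch below is an illustration under stated assumptions, not anything taken from the book: the series are independent Gaussian noise, the sizes are arbitrary, and `strongest_spurious_correlation` is a hypothetical name. It searches every pair of unrelated series and reports the strongest correlation it can find.

```python
import itertools
import math
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def strongest_spurious_correlation(n_series=100, n_obs=20, seed=0):
    """Generate independent noise series and return the most correlated pair.

    Every series is unrelated to every other by construction, so any
    correlation found is spurious. Sizes are illustrative assumptions.
    """
    rng = random.Random(seed)
    series = [[rng.gauss(0, 1) for _ in range(n_obs)] for _ in range(n_series)]
    best = max(
        itertools.combinations(range(n_series), 2),
        key=lambda pair: abs(pearson(series[pair[0]], series[pair[1]])),
    )
    return best, pearson(series[best[0]], series[best[1]])


if __name__ == "__main__":
    for count in (10, 50, 100):
        pair, r = strongest_spurious_correlation(n_series=count)
        print(f"{count:>3} series: best pair {pair} has correlation {r:+.2f}")
```

Because the same seed is reused, each larger run contains the smaller one, so the strongest correlation can only grow as more series are added. A data-mining program reporting that top pair would be reporting noise, which is exactly what the Smith test is designed to catch.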

Even neural networks, the shining star of cutting edge AI, are susceptible to being fooled by meaningless patterns. The hidden layers within them make the problem even worse as they hide the features they rely on inside of a black box that is practically impossible to scrutinize. They remind me of the witty response from a family cook responding to a question from a child about dinner choices: “You have two choices: take it or leave it.”

The risk that the data used to train a neural net is biased in some unknown way is a common problem. Even the most sophisticated model in the world could latch onto some feature, like the type of frame around a picture it is meant to categorize, and become completely lost when new pictures are presented to it that have different frames. Neural nets can also fall victim to adversarial attacks designed to derail them by obscuring small details that no thinking entity would consider important. The programmers may never figure out what went wrong, because the cause is buried in the hidden layers.

A paper was published a couple of days ago in which researchers acknowledged that the current approaches to AI have failed to come close to human cognition. Authors from DeepMind, as well as Google Brain, MIT, and the University of Edinburgh, write that “many defining characteristics of human intelligence, which developed under much different pressures, remain out of reach for current approaches.”[3] They conclude that “a vast gap between human and machine intelligence remains, especially with respect to efficient, generalizable learning.”

The more we understand about how Artificial Intelligence currently works, the more we realize that ‘intelligence’ is a misnomer. Software developers and data scientists have freed themselves from the original goal of AI and have created impressive software capable of extracting data with lightning speed, combing through it and identifying patterns, and accomplishing tasks we never thought possible. In The AI Delusion, Gary Smith has revealed the mindless nature of these approaches and made the case that they will not be able to distinguish meaningful from meaningless any better than they can identify what ‘it’ refers to in a tricky sentence. Machines cannot think in any meaningful sense so we should certainly not let them think for us.

[1] Guizzo, Erico. “IBM’s Watson Jeopardy Computer Shuts Down Humans in Final Game.” IEEE Spectrum: Technology, Engineering, and Science News. February 17, 2011. Accessed November 05, 2018. https://spectrum.ieee.org/automaton/robotics/artificial-intelligence/ibm-watson-jeopardy-computer-shuts-down-humans.

[2] My Cousin Vinny. Directed by Jonathan Lynn. Produced by Dale Launer. By Dale Launer. Performed by Joe Pesci and Fred Gwynne.

[3] Peter W Battaglia, Jessica B Hamrick, Victor Bapst, Alvaro Sanchez-Gonzalez, Vinicius Zambaldi, Mateusz Malinowski, Andrea Tacchetti, David Raposo, Adam Santoro, Ryan Faulkner, et al. “Relational inductive biases, deep learning, and graph networks.” arXiv preprint arXiv:1806.01261, 2018.