I just completed Berkeley’s enjoyable DATASCI W201 class “Research Design and Applications for Data Analysis” and figured I might as well share a few of my essays, since I crafted them with such tender loving care. Enjoy!

Week 5 – Up Is Up by Jay Cordes – Tuesday, 07 June 2016, 09:22 AM

At my last job, there were many examples where decision makers took questionable facts for granted. “Up is up” was even a favorite saying of one of the executives, and I would often have back and forth exchanges with him in meetings when I thought he was being duped by data.

In one case, practically everyone was fooled by a case of regression towards the mean. My business unit designed and conducted experiments to determine which web page designs maximized ad revenue for our customers, who collect domain names. Invariably, some of their domains would perform worse than others, and it was decided that we should try to optimize those “underperformers” by hand (typically, we’d use a generic design across the board). Being scientifically rigorous and only working on a random half was considered overkill.

My friend Brad was the one tasked with actually making the modifications, and each week he was given a list of domain names that could use help. Every single time he hand-optimized a set of domains, the revenue on those sites would significantly increase the following day (around 30-40%, if I recall). He was praised by customers and managers alike, who started to wonder why he wasn’t hand-optimizing all of the domains on our system. Brad was savvy enough to be skeptical of the results and smiled knowingly when I pointed out that there was practically no way for him to fail, since the worst domains would be expected to improve anyway just by chance.

Well, one time he forgot to work on the set of domains given to him. The next day, their total revenue skyrocketed by about 40% and the customer wrote “whatever you did worked!” Since the increase was at least as much as we’d seen with his hand-optimizing, we now realized that he could actually have been harming revenue. If we hadn’t assumed that things could only get better, and had instead used a randomized control (selected from the domains he was told to optimize), we would’ve clearly seen whether or not his work was having a benefit. The moral of the story is: up is definitely not always up.
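To make the regression-toward-the-mean effect concrete, here is a minimal simulation sketch. Everything in it is an assumption made for illustration (the number of domains, the revenue range, the size of the daily noise, and the function name); none of it comes from the real system. It flags the worst performers on day one, touches nothing, and then compares day-two revenue for a random "treated" half and a random control half.

```python
import random

def simulate_untouched_underperformers(n_domains=2000, n_flagged=200, seed=0):
    """Two days of revenue with NO intervention at all.

    Each hypothetical domain has a stable true revenue level, and each
    day's observed revenue is that level times independent random noise.
    """
    rng = random.Random(seed)
    true_level = [rng.uniform(50, 150) for _ in range(n_domains)]
    day1 = [t * rng.lognormvariate(0, 0.4) for t in true_level]
    day2 = [t * rng.lognormvariate(0, 0.4) for t in true_level]

    # Flag the worst day-1 performers, like the weekly "underperformers" list.
    flagged = sorted(range(n_domains), key=lambda i: day1[i])[:n_flagged]

    # Randomly split the flagged set into a "treated" half and a control half.
    rng.shuffle(flagged)
    half = n_flagged // 2
    treated, control = flagged[:half], flagged[half:]

    def lift(group):
        before = sum(day1[i] for i in group)
        after = sum(day2[i] for i in group)
        return (after - before) / before

    return lift(treated), lift(control)

treated_lift, control_lift = simulate_untouched_underperformers()
print(f"'treated' lift: {treated_lift:.1%}, control lift: {control_lift:.1%}")
```

Both halves improve even though nobody did any work, because the domains were selected for having had a bad day. In a real test, only the gap between the treated half and the control half would say anything about the hand-optimization itself.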

Week 7 – Phones Don’t Cause Cancer, The World is P-Hacking by Jay Cordes – Tuesday, 21 June 2016, 06:46 PM

The article, “Study finds cell phone frequency linked to cancer,” (http://www.mysuncoast.com/health/news/study-finds-cell-phone-frequency-linked-to-cancer/article_26411b44-290a-11e6-bffc-af73cd8bda5f.html) reports that a recent study shows that cell phone radiation increases brain tumors in rats. It also provides safety tips, such as keeping phones away from your ear.

The problem with the article is subtle: the failure to recognize the likelihood of spurious results due to repeated testing. “P-hacking” refers to when researchers hide or ignore their negative results. It’s similar to editing a video of yourself shooting baskets and only showing a streak of 10 consecutive makes. This study wasn’t p-hacked, but the result should still be regarded with skepticism due to the number of other studies that did not find similar evidence. In other words, global p-hacking is occurring when articles focus on this experiment in isolation.

Another way to think of p-hacking is in terms of “regression toward the mean”, which occurs because (1) luck almost always plays some role in results, and (2) luck is more likely to be present at the extremes. A small amount of luck will lead to future results that are slightly less extreme. However, if the results are due entirely to luck, they will evaporate completely upon re-testing. With many failed attempts comes a higher likelihood that luck played a big role in the successes.
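A small sketch of that last point, under stated assumptions: suppose many studies are run on an effect that is truly zero, so every observed effect is pure luck. The study count, sample size, and function name below are hypothetical choices for illustration. The code picks the most impressive study and then replicates it.

```python
import random
import statistics

def best_of_many_null_studies(n_studies=100, n_samples=50, n_retests=20, seed=1):
    """Pick the best result among studies of a zero effect, then retest it."""
    rng = random.Random(seed)

    def run_study():
        # The true effect is zero, so the observed effect is only noise.
        return statistics.fmean([rng.gauss(0, 1) for _ in range(n_samples)])

    first_round = [run_study() for _ in range(n_studies)]
    best = max(first_round)  # the one study that would make headlines

    # Replicate the "winning" study several times and average the results.
    retest = statistics.fmean([run_study() for _ in range(n_retests)])
    return best, retest

best, retest = best_of_many_null_studies()
print(f"best original effect: {best:.3f}, average on retest: {retest:.3f}")
```

The headline result looks like a real effect only because it was the maximum of many lucky draws. The replications, which were not selected for being extreme, fall back toward zero.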

The article doesn’t recognize that results from other studies should weaken the confidence you should have in this one. It also mentions that the radiation is non-ionizing, but doesn’t note that only ionizing radiation (high-frequency electromagnetic radiation including ultraviolet, x-ray, and gamma rays) has been shown to cause mutations. From a purely physical standpoint, cancer-causing cell phone radiation is very unlikely. Also, no mention is made of the fact that cancer rates haven’t skyrocketed in response to widespread cell phone usage around the world.

The only criticism I have for the experiment itself is the fact that the results were broken down by sex. What explanation could there be for why females were not affected by radiation? Would the result have been significant if the sexes were combined? A couple of “misses” do seem to have been edited out.

I chose this blind spot to discuss because I believe it scores a perfect 10 in terms of its pervasiveness and negative impact on interpreting results. At my last job, we ran a randomized controlled test on web pages of a few different colors and found no significant difference in revenue. My boss then recommended that we look at the results by country, which made me wince and explain why that’s a data foul. An analyst did look at the results and found that England significantly preferred teal during the experiment. Due to my objections, we thankfully did not roll out the teal web page in England, but kept the experiment running. Over the next few weeks, England appeared to significantly hate teal. It pays to be skeptical of results drawn from one of many experiments.
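The reason slicing by country is a data foul can be sketched with a quick calculation. This is an illustration under assumptions, not the actual analysis: it supposes 20 country slices (a made-up number), independent tests, a 0.05 significance threshold, and no real color preference anywhere, in which case each slice's p-value is uniformly distributed between 0 and 1.

```python
import random

def chance_of_spurious_winner(n_slices=20, alpha=0.05, n_trials=20000, seed=2):
    """Estimate how often at least one slice looks 'significant' by luck."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_trials):
        # With no true effect, each slice's p-value is uniform on [0, 1).
        if any(rng.random() < alpha for _ in range(n_slices)):
            hits += 1
    simulated = hits / n_trials
    # Closed form for independent tests: 1 - (1 - alpha)^k
    exact = 1 - (1 - alpha) ** n_slices
    return simulated, exact

simulated, exact = chance_of_spurious_winner()
print(f"simulated: {simulated:.3f}, exact: {exact:.3f}")
```

Under these assumptions, a "significant" country turns up in well over half of all experiments in which nothing is actually going on, which is why a single surprising slice deserves a follow-up test rather than a rollout.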

Week 10 – Jay Cordes: Visualizations Can Affect Your Intelligence by Jay Cordes – Monday, 11 July 2016, 11:33 PM

The worst visualization I’ve ever seen actually makes you dumber for having seen it. It’s this one from Fox News evidently attempting to show that global warming is not a thing…

Figure 1 – Wow, this is nonsense.

It’s not bad in the sense of Edward Tufte’s “chartjunk” (there’s no graphical decoration at all). It’s also not violating the idea of data-ink maximization (there’s actually not enough ink used, since there’s no legend explaining what the colors represent). It’s bad because:

- It’s flat-out nonsense. It turns out that the map on the left shows whether temperatures were higher or lower than normal the prior March, while the map it’s being compared to shows the absolute minimum temperature on a particular day.

- Even if the chart were actually comparing what it pretends to compare, it would still be meaningless. Can a one-month exception cast doubt on a decades-long trend?

- If the colors on the left map actually meant what they appear to mean, then we would be supposed to believe that Minnesota hit 100 degrees in March of 2012. Minnesota did set the record for the hottest average March temperature in its history, but that average was only 48.3 degrees.

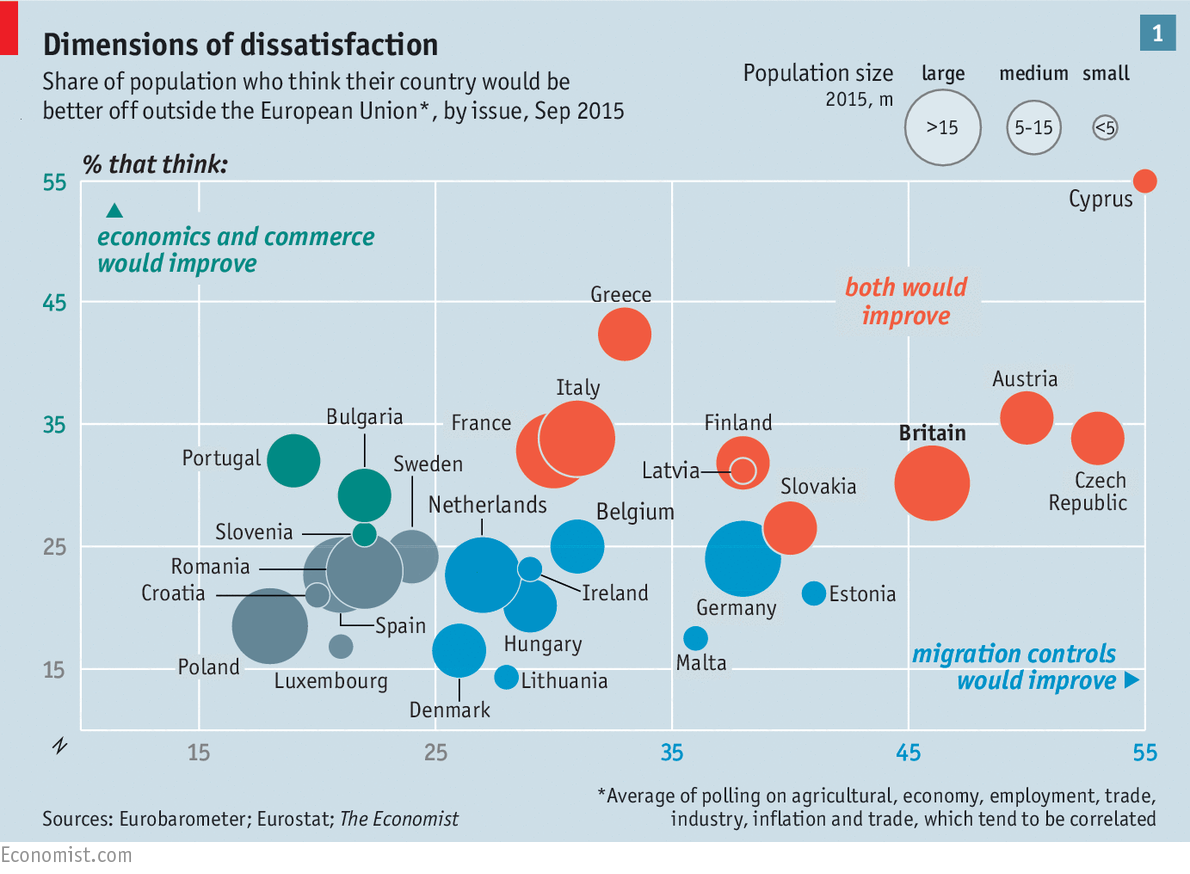

On the other hand, one of the best charts I’ve seen is this one from the Economist that we used in our presentation on Brexit.

Figure 2 – Now we’re learning something

There’s a density of information that is presented very simply and efficiently: Which European countries believe they’d be better off outside the European Union? Is it more that they think their economics and commerce would improve or their migration controls? What are their population sizes? Why did England vote to leave? Unlike the Fox News chart, the data is presented truthfully and clearly. Answers to all of these questions can be quickly drawn from it. I also like the subtle details, such as making it clear what the colors of the circles represent by simply coloring the font of the axis labels. The grid lines are white on a light gray background to avoid cluttering up the chart. Also, the population sizes are rounded to one of three values to simplify comparison. This chart definitely follows the idea of data-ink maximization.

Sources:

- http://mediamatters.org/blog/2013/03/25/another-misleading-fox-news-graphic-temperature/193247
- http://www.economist.com/news/briefing/21701545-britains-decision-leave-european-union-will-cause-soul-searching-across-continentand
- http://files.dnr.state.mn.us/natural_resources/climate/twin_cities/ccmarch1981_2010.html
- http://shoebat.com/wp-content/uploads/2016/06/20160702_FBC814.png
- http://m5.paperblog.com/i/48/483755/every-day-is-april-fools-at-fox-news-L-byleIS.jpeg